SaaS A/B Testing Examples

Want a peek into the playbook of real SaaS growth teams?

Find your next A/B testing idea inside.

📈 Get proven CRO tactics. Takes less than a minute.

“We’ve tried these ideas in our own CRO efforts. And we’ve had real success.”

"Most A/B testing ideas out there are rubbish.

A lot of it is just pure SEO filler content.

How do you trust someone who’s never run a test in their life?

That’s what I realised while scouring the internet for inspiration for my own CRO efforts. It’s rare to find ideas that have actually been stress-tested and thought through with clear rationale.

That’s why I put together this website.

It’s a playbook of A/B testing ideas I’ve tried myself, working in SaaS growth teams.

It’s the kind of playbook I wish I had years ago...

If this helps you run even one better test, I’ll call that a win. Enjoy!"

Frequently Asked Questions

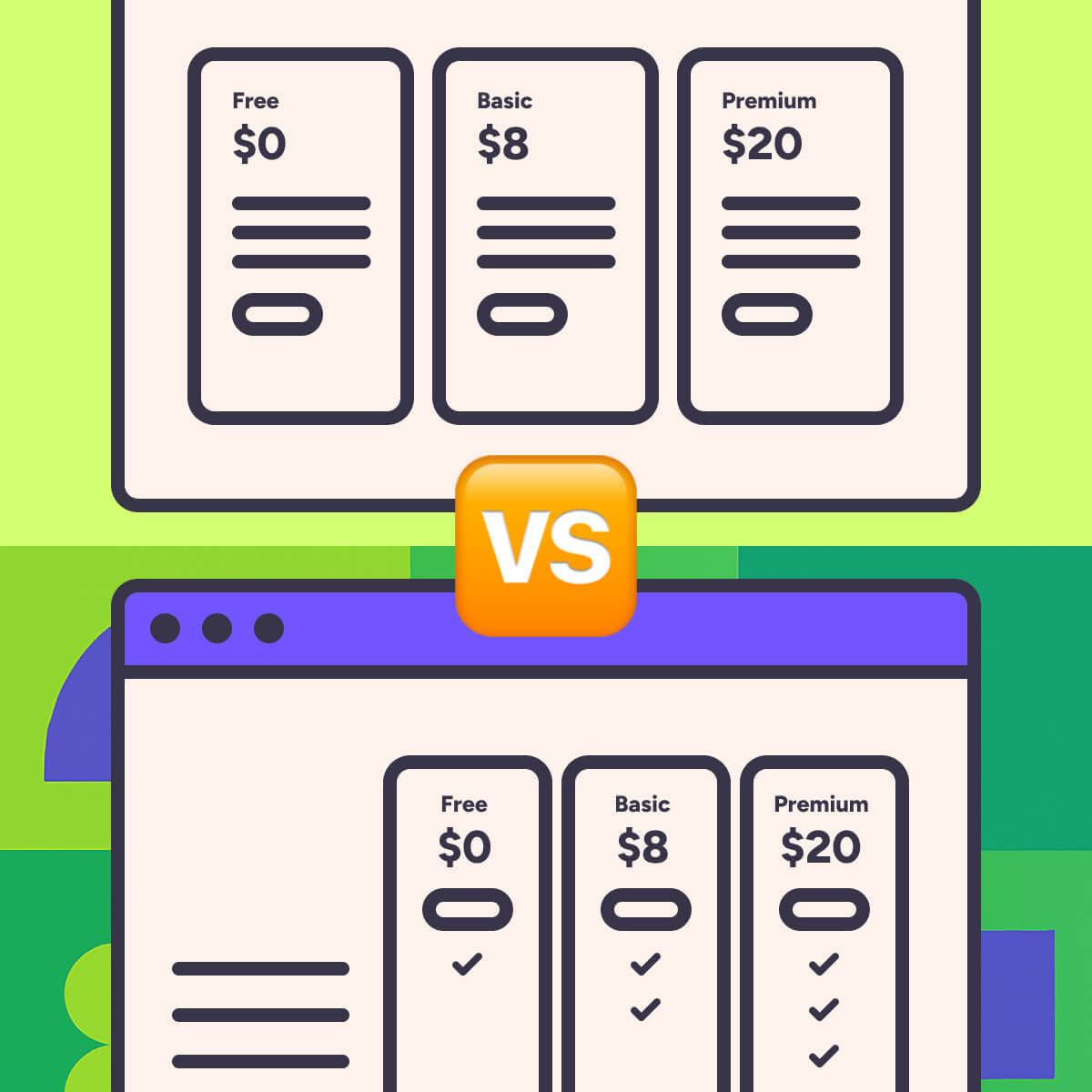

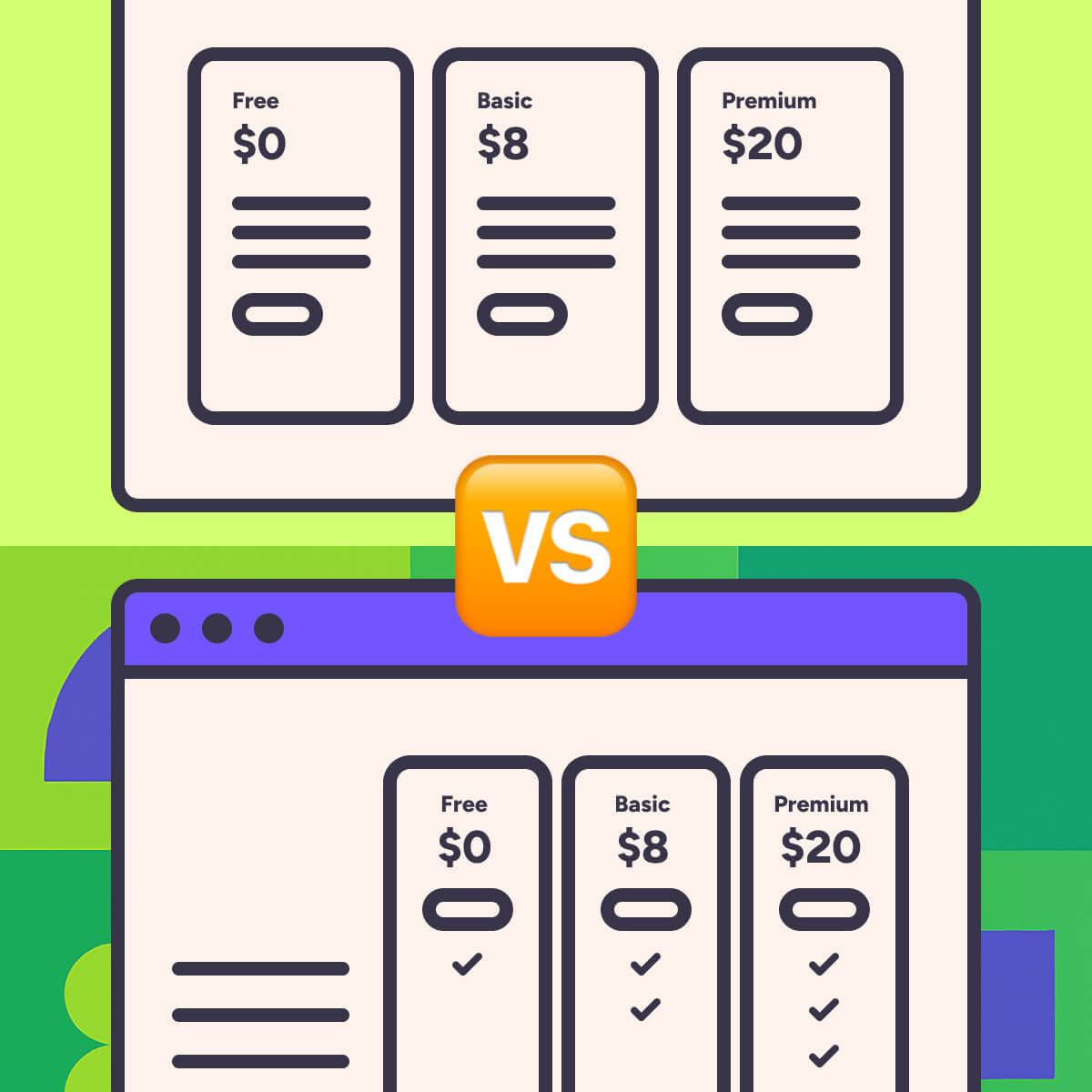

A/B testing (or split testing) is when you compare two variations of something to see which one performs better. It helps you make data-backed decisions instead of guessing what works.

It’s a practice you can apply to any part of the marketing funnel, such as:

- Landing pages

- Ads

- Emails

- Onboarding flows

- and even product features

At a bird’s-eye level:

- Start by defining the goal: When running A/B tests for landing pages, you’re generally looking for an uplift in the conversion rate of the page’s main action (like user signups or demo requests).

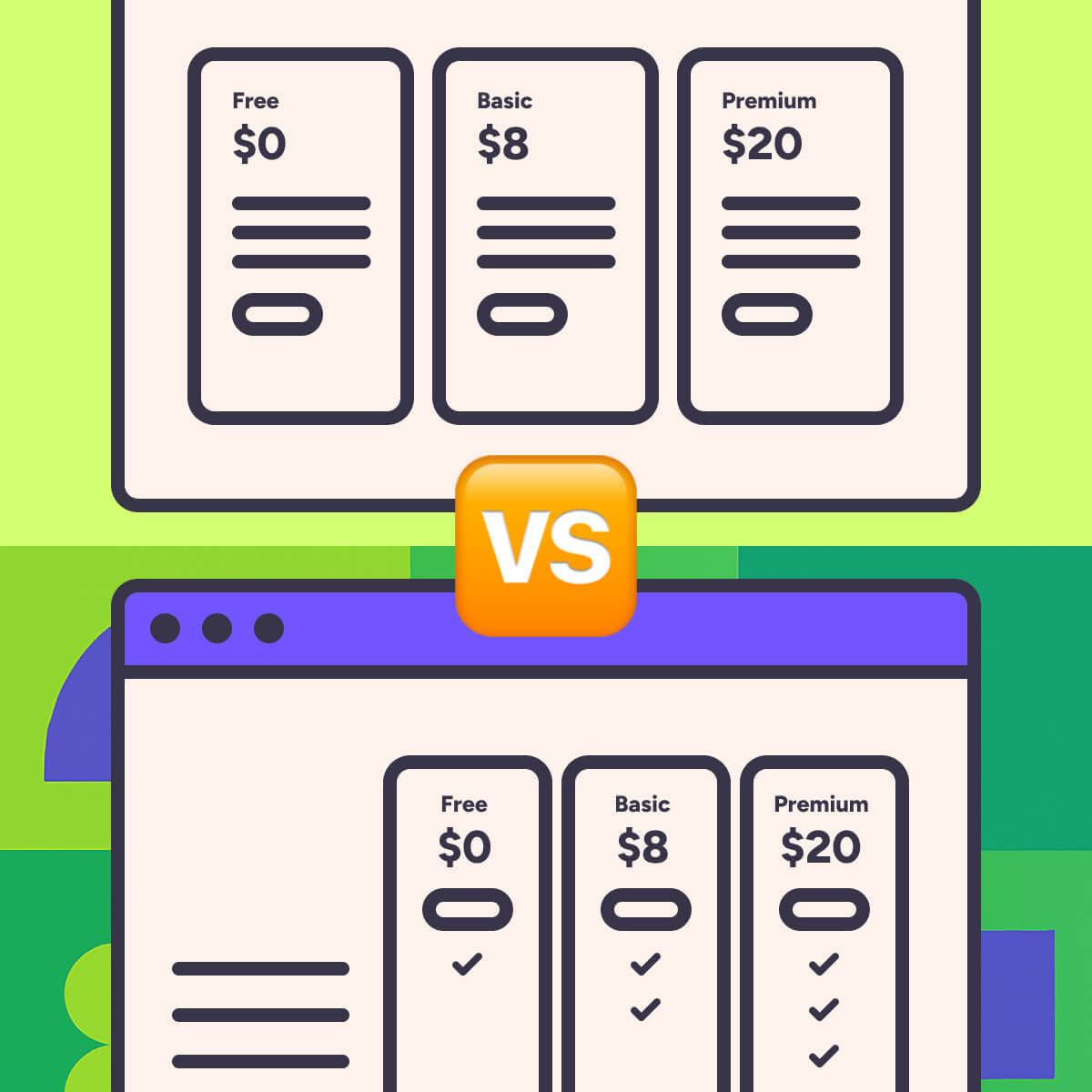

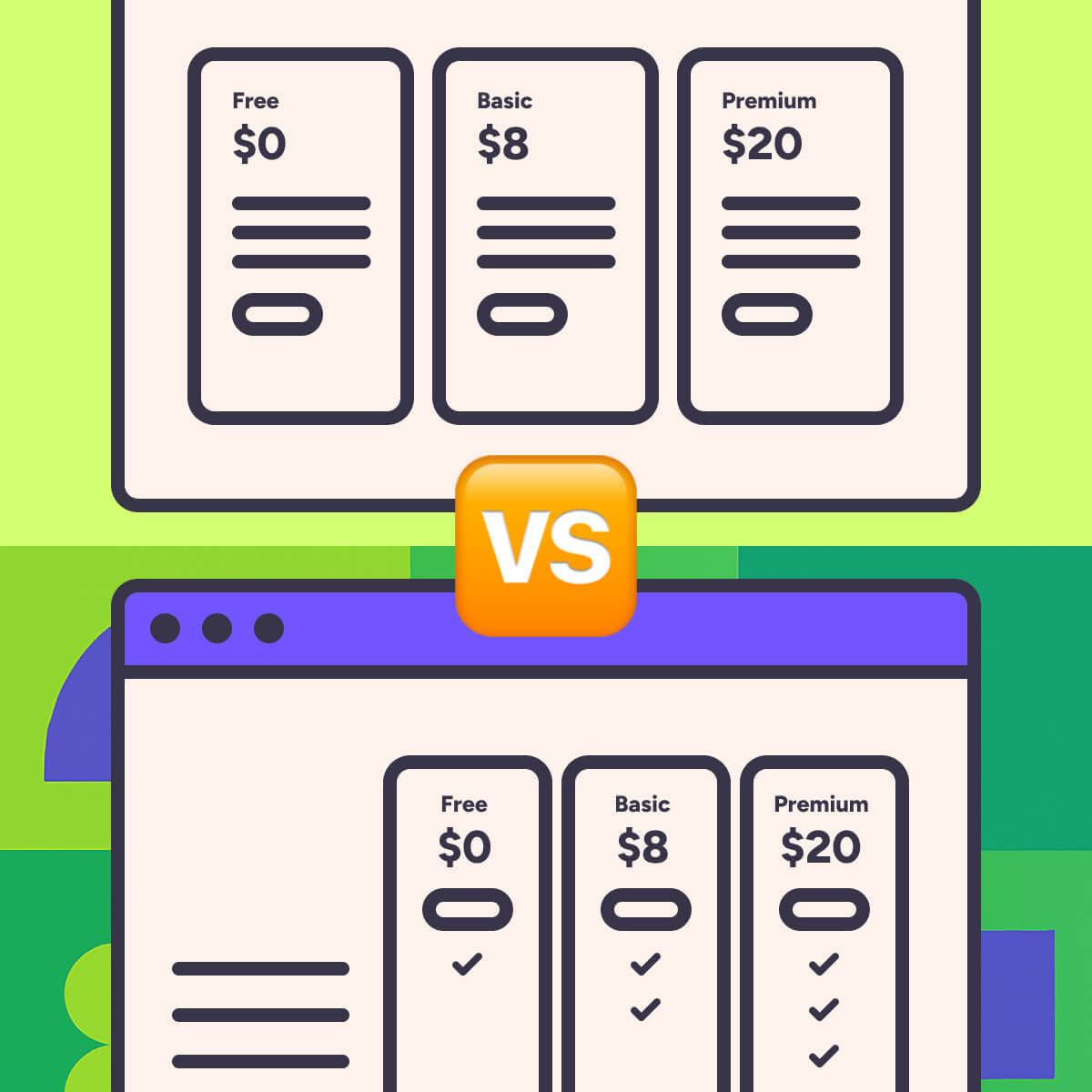

- Identify what change(s) you want to test: Ideally, test one thing at a time (e.g. positioning copy, layout, or CTA). But if you’re short on time or have low traffic, testing wholesale changes is fine.

- Build a variant: Create a variation of your landing page that includes that change.

- Use A/B testing software: Split the traffic and measure performance between the original page (the “control”) and the variant. VWO is my preferred tool, but Optimizely and Crazy Egg work too.

Only implement winning variants. In theory, you could double your conversion rate simply by compounding 5 winning variants that each perform 15% better than the control.
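To sanity-check that "double your conversion rate" claim, here is a minimal sketch of the compounding arithmetic. The function name `compounded_multiplier` is just an illustration, and it assumes each win applies its full uplift on top of the previous ones, which real tests rarely do so neatly.

```python
def compounded_multiplier(uplift_per_win: float, wins: int) -> float:
    """Overall multiplier on the baseline conversion rate.

    Assumes every winning variant keeps its full relative uplift
    when stacked on the previous winners (an idealised case).
    """
    return (1 + uplift_per_win) ** wins


# Five winners at +15% each, as in the paragraph above.
multiplier = compounded_multiplier(0.15, 5)
print(f"{multiplier:.2f}x the original conversion rate")  # 2.01x

# Four winners are not quite enough to double it.
print(f"{compounded_multiplier(0.15, 4):.2f}x")  # 1.75x
```

So five stacked +15% wins land at roughly 2x, while four only get you to about 1.75x.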

The hard part is figuring out which ideas are high vs low impact. Use the A/B testing examples playbook for inspiration.

If you’re A/B testing with low volume (e.g. <100 user signups/month), ignore the small gains or losses.

A +3% uplift vs. control is unlikely to be statistically significant at low volume, so acting on it risks a false positive.

You want those sweet and juicy 10%+ winners. Only stack the wins when they’re glaringly obvious.
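To see why small uplifts get lost in the noise at low volume, here is a rough sketch using a two-proportion z-test. That is one common way to check significance, not necessarily what your A/B testing tool does under the hood. The visitor and signup numbers are made up for illustration, and `two_proportion_p_value` is a hypothetical helper, not part of any library.

```python
from math import erf, sqrt


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates.

    Uses a pooled two-proportion z-test (normal approximation).
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no variation at all, so no detectable difference
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF evaluated at |z|
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return 2 * (1 - cdf)


# Hypothetical low-volume month: 2,000 visitors per arm,
# 100 signups on the control vs. 103 on the variant (+3% relative).
p_small = two_proportion_p_value(100, 2000, 103, 2000)
print(f"p-value at low volume: {p_small:.2f}")

# Same +3% relative uplift with 1,000,000 visitors per arm.
p_large = two_proportion_p_value(50_000, 1_000_000, 51_500, 1_000_000)
print(f"p-value at high volume: {p_large:.6f}")
```

With the low-volume numbers the p-value sits far above the usual 0.05 threshold, so the +3% could easily be chance. The same relative uplift only becomes convincing once the sample is much larger.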

If you’re doing textbook A/B testing, only stack the winning variants.

However, if your test is a dead heat and the variant is directionally better or aligns with other business goals (like better brand fit or technical simplicity), then go for it.

Yes… but with an asterisk.

It’s not as clean and impartial as an actual A/B test, but it’s better than not optimising at all.

Just be aware of external factors that could skew results (seasonality, traffic source changes, etc).

Want to know the truth? In my history of A/B tests, the losing experiments far outnumber the winners. But you only get to the winners with persistence and iterative learning.

This A/B testing playbook is a collection that includes my most successful experiments. Use it for your inspiration.
